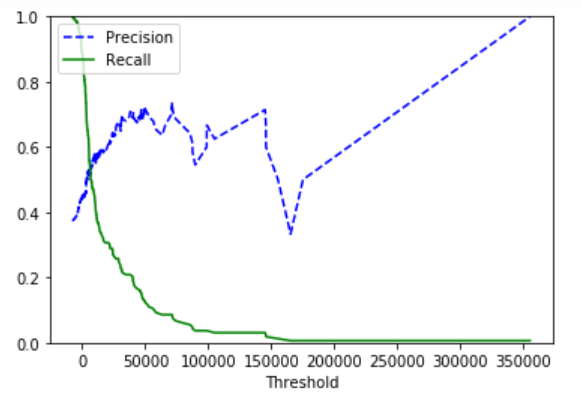

Threshold Which Gives You the Best F1_score

Below we calculate the F1 Score for each threshold 001 002 003099. F1_scores 2recallprecisionrecallprecision printBest threshold.

Understanding Performance Measures For Classification Problems By Nash Medium

The match score is also by used InsideView Data Integrity InsideView Enrich InsideView Refresh and InsideView Diagnose products to return a quality matched.

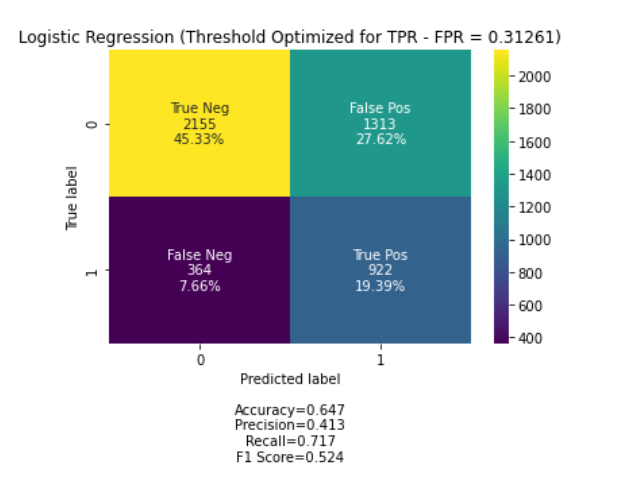

. Where y_test_prob best_threshold 1 0. This gives you some intuition. The threshold that would produce this outcome from the algorithm is 03126109044627986 Automating the Threshold Calculation Take 2.

I actually wrote my first paper in machine learning on this topic. The below function iterates through possible threshold values to find the one that gives the best F1 score. Find the threshold value that gives the best F1 Score.

Thresholdsnpargmaxf1_scores printBest F1-Score. F 1 2 T P 2 T P F P F N. If it is a cancer classification application you dont want your threshold to be as big as 05.

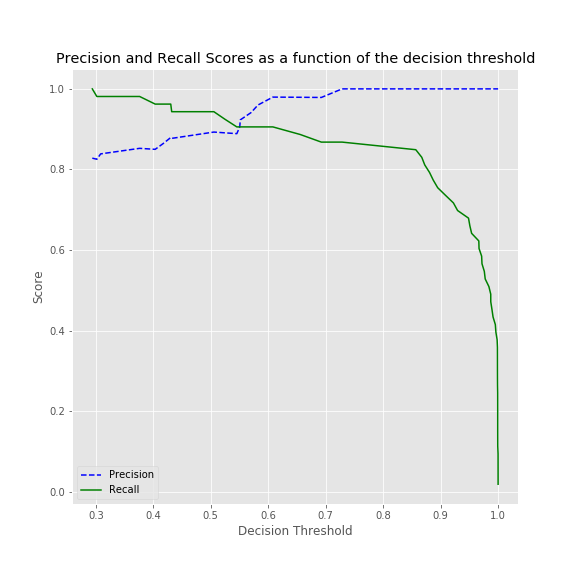

In the figure above we see the visualizer tuned to look for the optimal F1 score which is annotated as a threshold of 043. For instance all the points with an F1-score of 05. This answer is not useful.

Use Adopted Threshold When Making Class Predictions on New Data. Just use the NumPy functions to find the threshold that maximizes the F1-Score. We will take a look at some of the most common examples in the following sections.

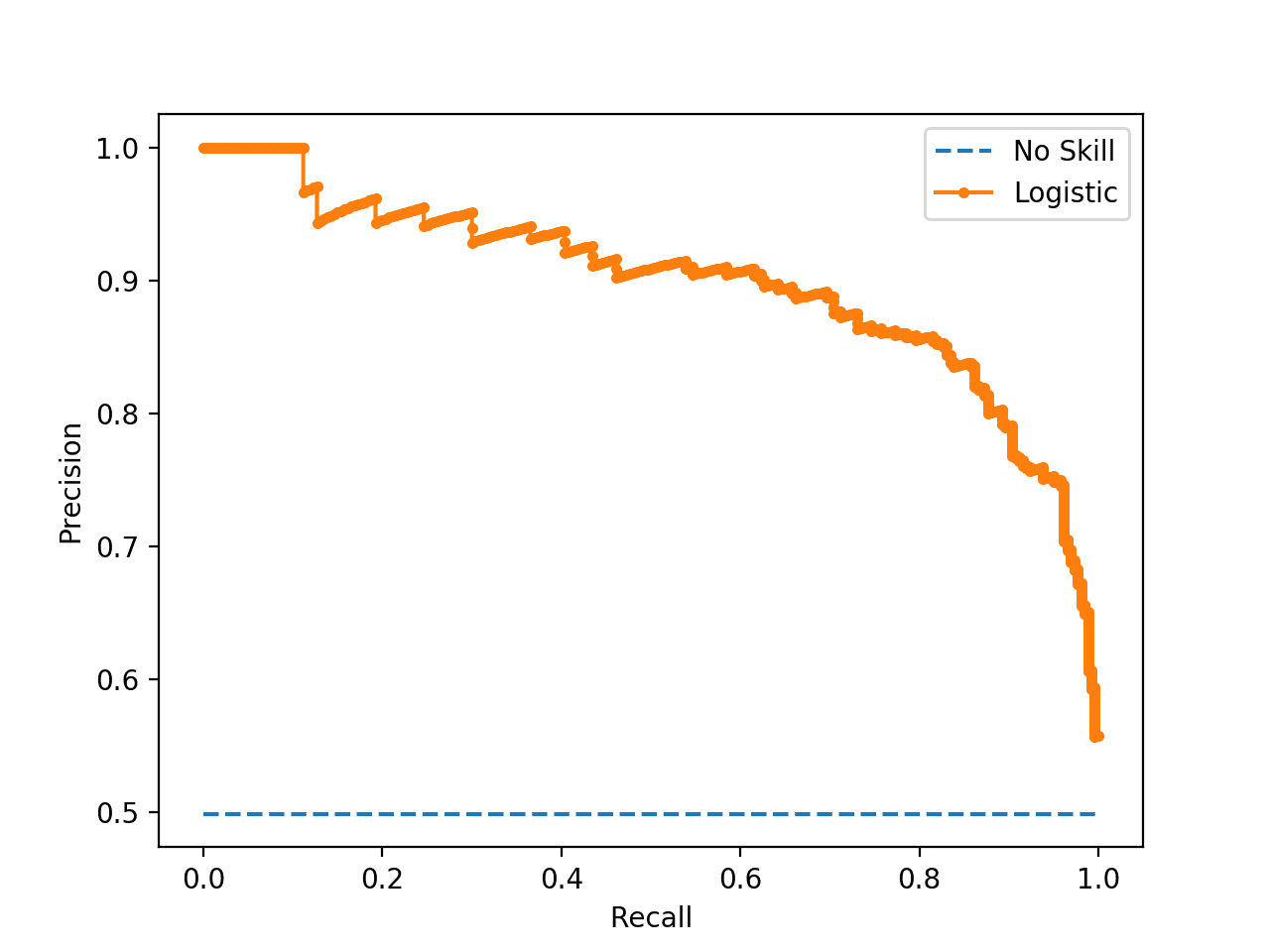

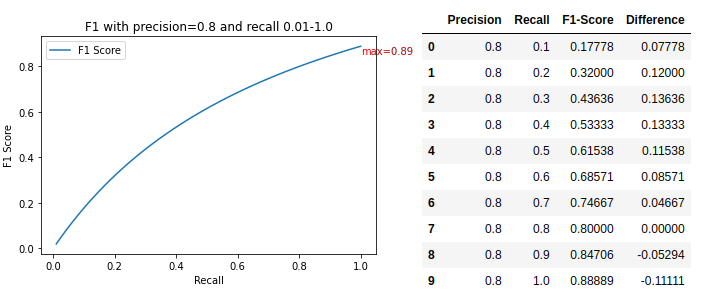

If you want the best tradeoff it helps to visualize the tradeoff with isocurves. The downside is that we lose some ground in how well our model correctly predicts HasAns examples. F1 Score is defined as the harmonic mean between precision and recall see more here.

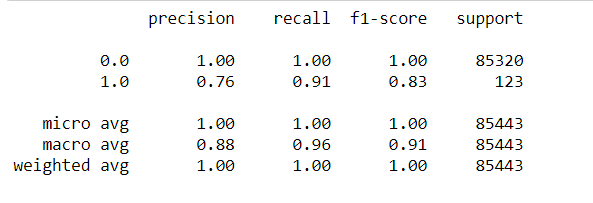

The threshold that gives the optimal cutoff optimal F1 Score is 32 or 32. In it we identified that when your classifier outputs calibrated probabilities as they should for logistic regression the optimal threshold is approximately 12 the F1 score that it achieves. Micro average macro average and.

One method of accomplishing this is to choose the threshold that optimizes the F1 Score. The optimal empirical threshold will lie between two scores that include the value F2 when the scores are calibrated in accordance with Theorem 1. Although simple there are a few different approaches to implementing threshold-moving depending on your circumstance.

For any classifier that produces a real-valued output we derive the relationship between the best achievable F1 score and the decision-making threshold that achieves this optimum. Achiev able F1 score and the decision-making threshold that achieves this optimum. This is the reason why hyperparameters tuning like GridSearch and RandomizedSearch in python.

When we used the default threshold of 10 we saw that our NoAns_f1 score was a mere 636 but when we use the best_f1_thresh we now get a NoAns_f1 score of 75 - nearly a 12 point jump. Isocurves help you visualize any scoring or cost function like F1. Your holdout set is just there to double confirm that whatever has worked on your training set will generalize to images outside of the training set.

The threshold s that classifies all examples positive and maximizes F1 analytically by Theorem 2 has an empirical F1 value close to its expectation of 2 b 1 b 2 1 1 b since tp fp and fn are all estimated from the entire data. Here we can see that the optimum ie. For any classifier that produces a real-valued output we derive the relationship between the best achievable F1 score and the decision-making threshold that achieves this optimum.

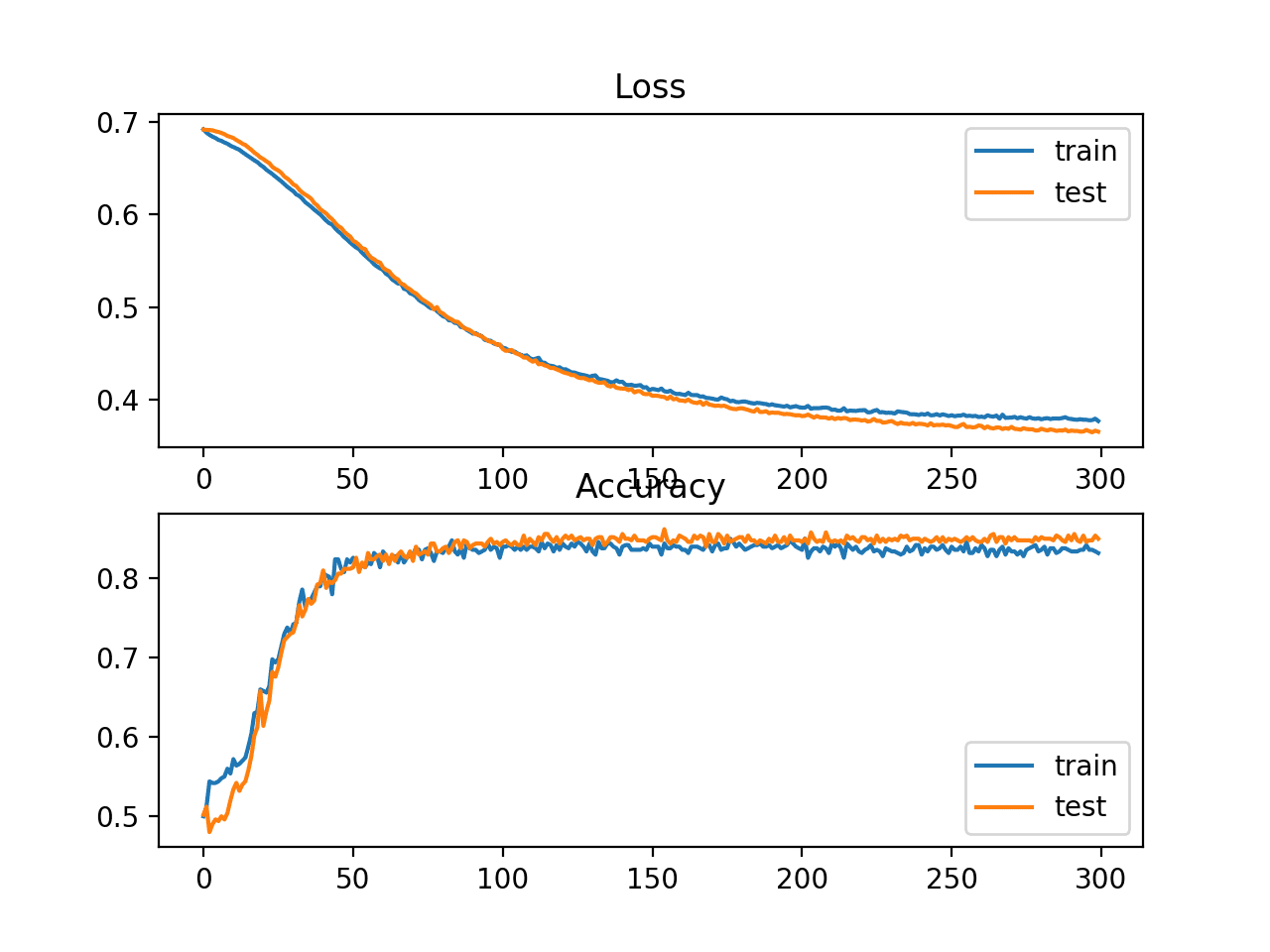

The model is run multiple times over multiple traintest splits in order to account for the variability of the model with respect to the metrics shown as the fill area around the median curve. Even if a patient has a 03 probability of. If Score is Better than Best Score.

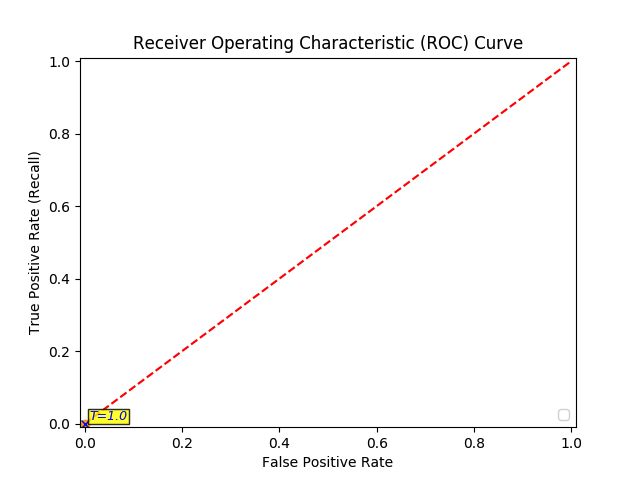

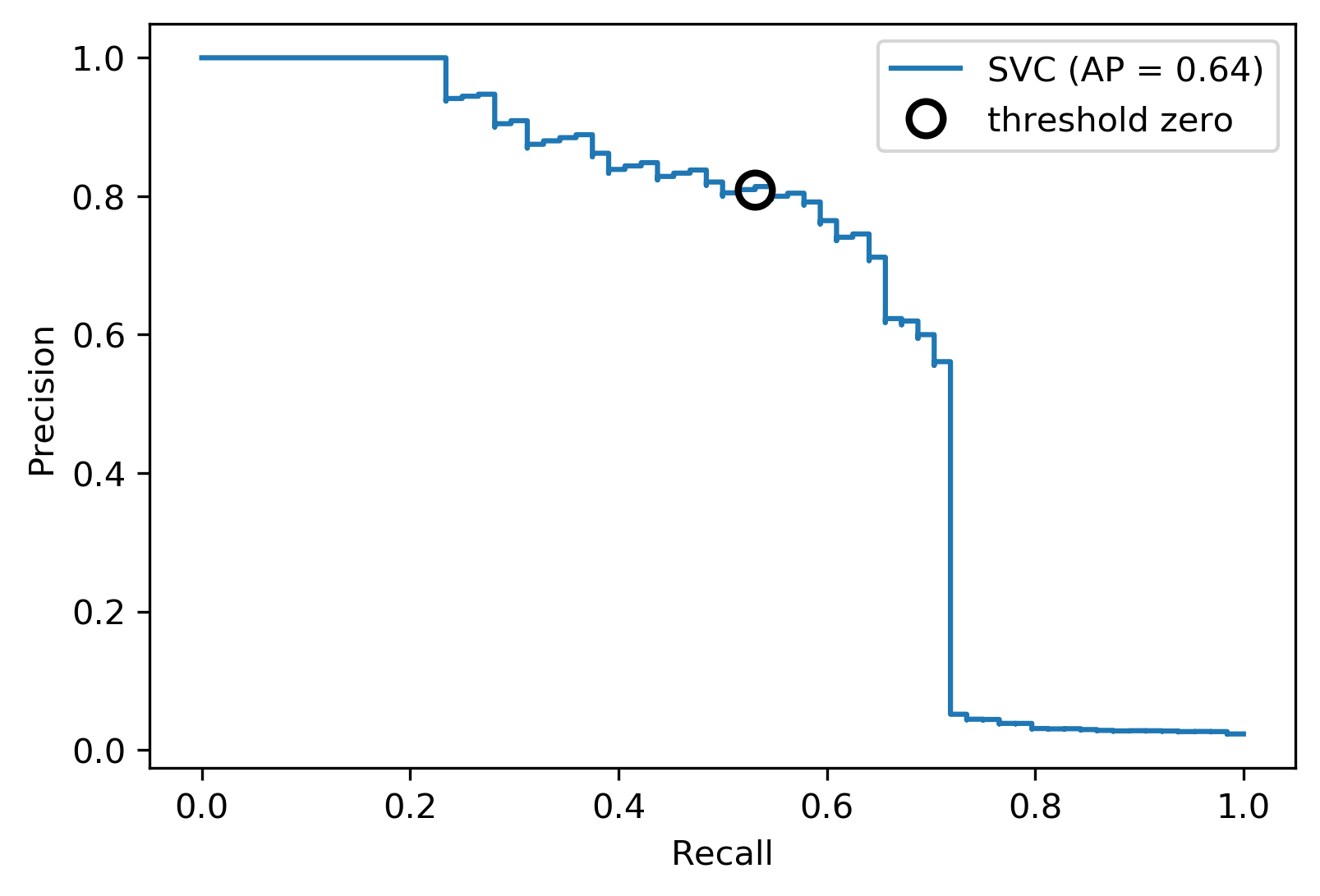

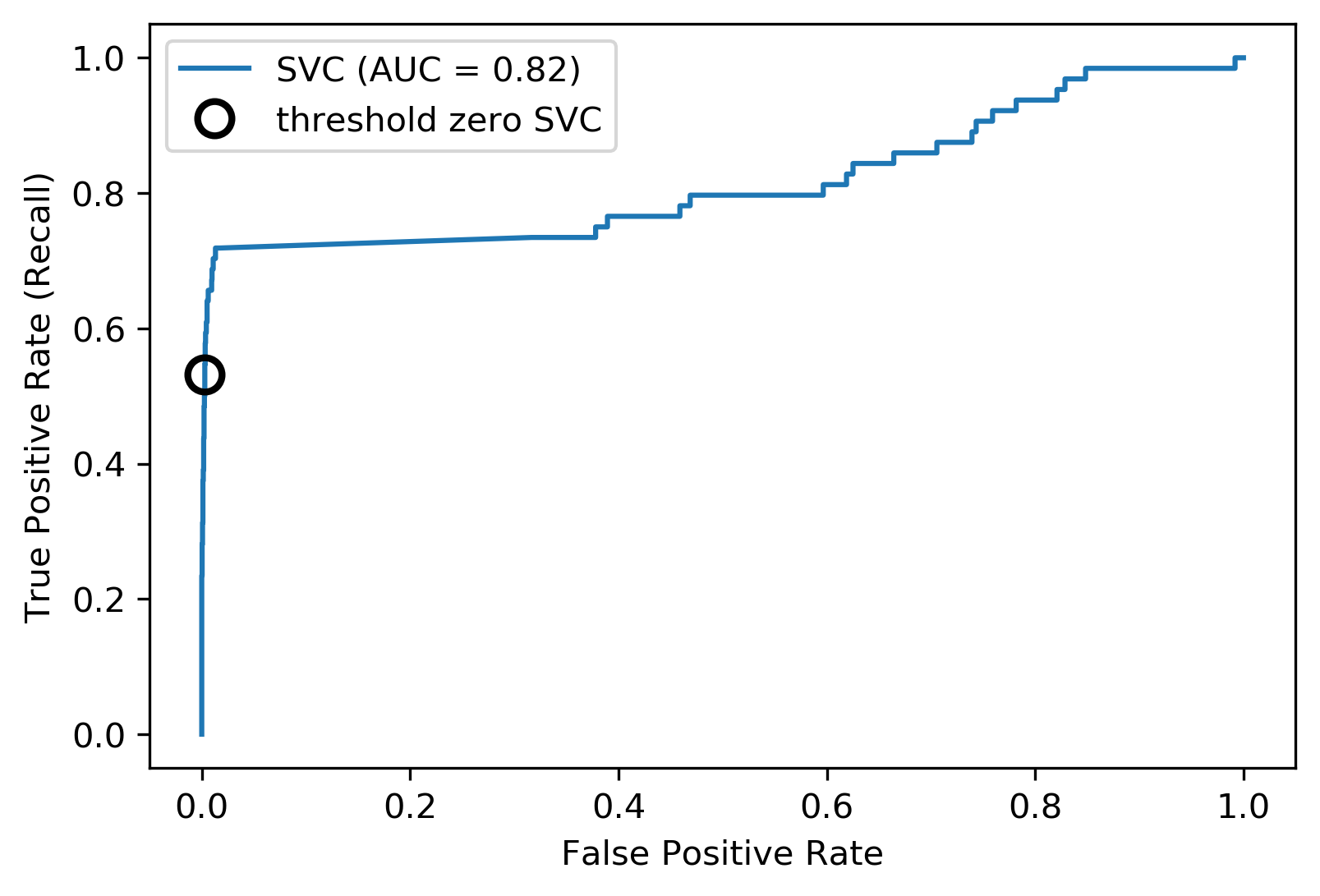

The moment you choose any specific threshold in precision recall F1 etc you have left the realm of the AUC scores ROC or PR altogether - you are in a single point on the curve and the average area under the curve is no longer useful or even meaningful. Also you can set this threshold to 08 or other value from 01. For example you can assume that if probability is greater than 05 then sample belongs to class 1 and 0 otherwise.

After calculating the precision recall and threshold scores you get NumPy arrays. The maximum F1 score is 05237148537347345 with the optimum precision and recall being 041 and 072 respectively. In each case you will have different True PositiveNegative and False PositiveNegative numbers.

Show activity on this post. As another special case if the. Each fold generates its own F1-score then I average all 10 F1-scores to produce the mean F1-score.

Match scores provide insight into the quality of a matched record. Ideally the threshold should be selected on your training set. As a special case if the classifier outputs are well-calibrated conditional probabilities then the optimal threshold is half the optimal F1 score.

The harmonic mean of precision and recall F1 score is widely used to measure the success of a binary classifier when one class is rare. A match score is a number from 1 to100 that indicates the likelihood that a match obtained by InsideView is accurate. And precisionrecallf1-score will be different.

As a special case if the classifier outputs are well-calibrated conditional probabilities then the optimal. Argmax calc_f1 p_r for p_r in zip precision recall best_threshold best_precision best_recall threshold best_f1_index precision best_f1_index recall best_f1_index Calulcate predictions based on the threshold value. Overall however we see a net increase of a couple points in both EM and.

When you want to choose the threshold that fits the business problem. Highest score default Date modified newest first Date created oldest first This answer is useful. An iso-cost curve or indifference curve depicts a set of points that have the same cost or metric value.

Show activity on this post. The optimal threshold will never be more than 5. As a special case if the classifier outputs are well-calibrated conditional probabilities then the optimal threshold is half the optimal F1 score.

The question is if I select an optimal threshold for each folds F1-scores and then find the average of all F1-scores which should definitely give me a better result in comparison to the threshold05 would that be considered over-fitting.

Recall Precision F1 Roc Auc And Everything By Ofir Shalev Ofirdi The Startup Medium

Classification On Imbalanced Data Using Scikit Learn Important Gaps To Avoid By Sundar Rengarajan Medium

How To Calculate Precision Recall F1 And More For Deep Learning Models

A Look At Precision Recall And F1 Score By Teemu Kanstren Towards Data Science

Machine Learning Calculating The Number Of True Positives From A Precision Recall Curve Stack Overflow

Evaluation Metrics And Scoring Applied Machine Learning In Python

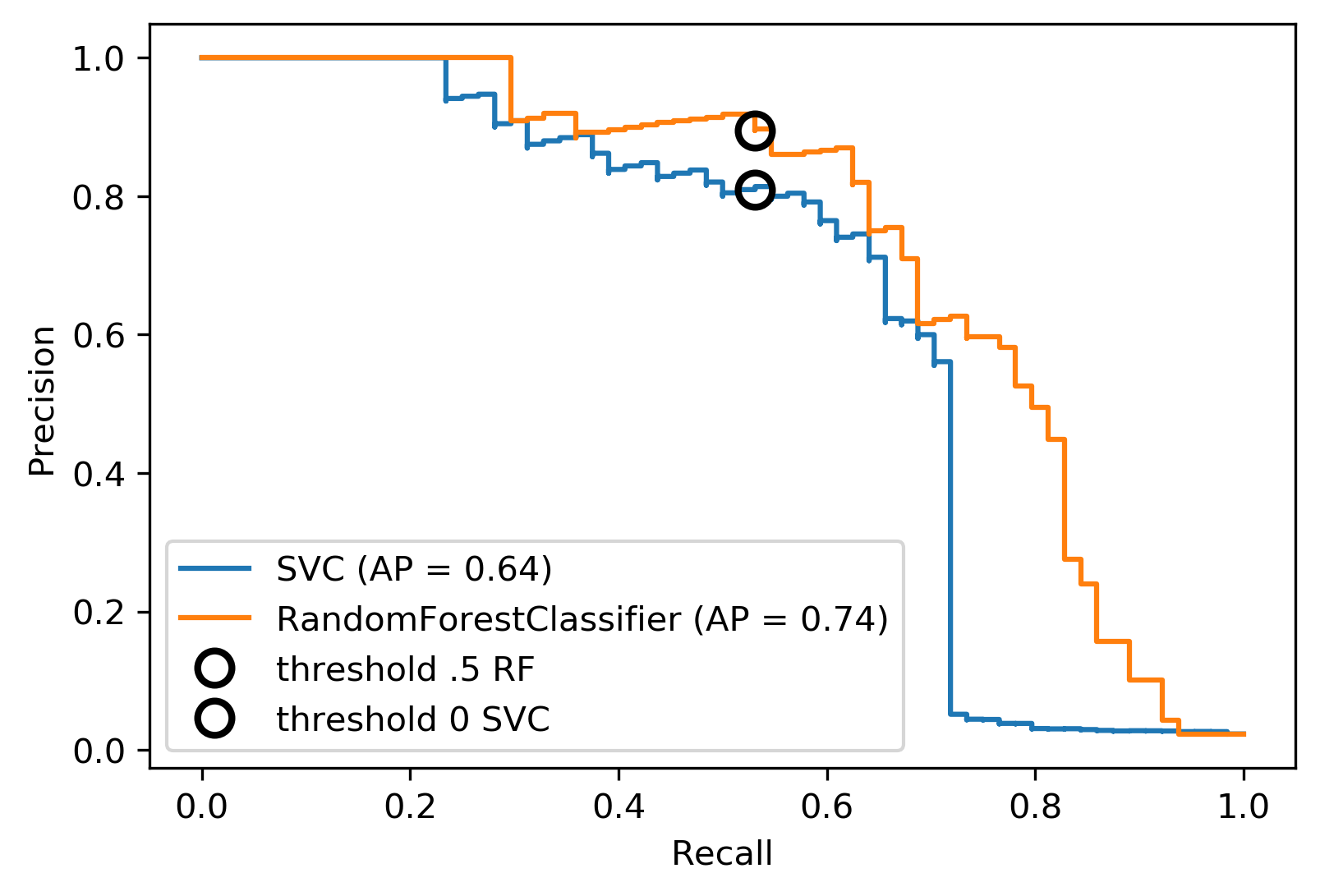

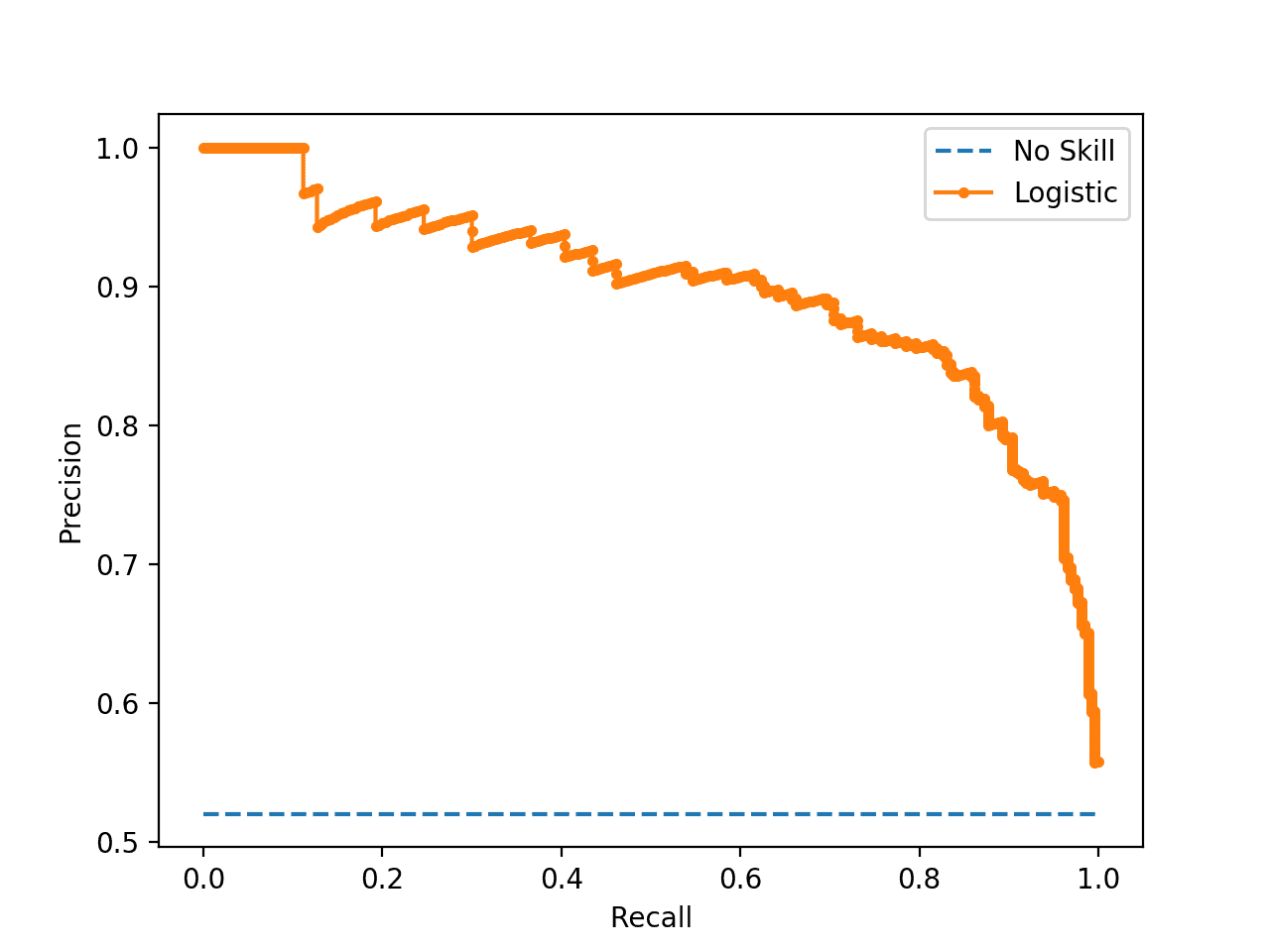

Calculating And Setting Thresholds To Optimise Logistic Regression Performance By Graham Harrison Towards Data Science

Evaluation Metrics And Scoring Applied Machine Learning In Python

Evaluation Metrics And Scoring Applied Machine Learning In Python

Pdf Thresholding Classifiers To Maximize F1 Score

What Is The Best Metric Precision Recall F1 And Accuracy To Evaluate The Machine Learning Model For Imbalanced Data

How To Use Roc Curves And Precision Recall Curves For Classification In Python

Decision Trees Xgboost For Binary Classification Choosing The Right Threshold Data Science Stack Exchange

Fine Tuning A Classifier In Scikit Learn By Kevin Arvai Towards Data Science

Recall Precision F1 Roc Auc And Everything By Ofir Shalev Ofirdi The Startup Medium

Fine Tuning A Classifier In Scikit Learn Kaggle

How To Calculate Precision Recall F1 And More For Deep Learning Models

How To Calculate Precision Recall F1 And More For Deep Learning Models

Comments

Post a Comment